Azure SQL Database is part of a strong and strengthening overall data story in Microsoft Azure. The flexibility of the platform, its scalability both up and out, and the fact that so much functionality is simply included all combine to make Azure SQL Database a great offering. One thing that has been missing, and that the on-prem (or Azure VM) world continues to enjoy, is SQL Server Agent. SQL Agent has long been used to schedule and execute database maintenance tasks relating to indexes, statistics, and on and on. You’ve certainly been able to perform tasks against Azure SQL using PowerShell, Azure Automation, etc. But that familiar ability to schedule the execution of plain old TSQL using plain old TSQL just wasn’t there.

Debra Dove, Principal Program Manager on the SQL Engineering team at Microsoft, announced at Ignite 2016 in September that a shiny NEW Elastic Database Jobs was entering Private Preview. Note that there is an earlier version of Elastic Database Jobs currently in public preview in the Azure Portal. This new version I am showing today will be the path going forward and will replace that current version in the portal. The NEW Elastic Jobs does not include a Portal experience at the time of this writing.

The new Elastic Database Jobs are designed to echo the functionality that folks working with SQL Server are accustomed to on-prem with SQL Agent. But it’s even better than that. There are many features that are just baked in that you no longer have to worry about. I’ve presented on the new Elastic Jobs a handful of times as part of a larger presentation on the overall Elastic tools associated with Azure SQL Database. That presentation is called Azure SQL Database Elastic Boogie and references Marcia Griffiths’ hit song Electric Boogie (The Electric Slide). Yeah. I know. That will explain the use of the word boogie all over the place.

Even though this is a very new private preview, my experience has been a great one. Huge kudos to Debra and her team on that.

Note: There are several offerings around Azure SQL Database that include the word “Elastic” in their name: Elastic Database Pools, Elastic Database Query, the Elastic Scale Client .NET library, and Elastic Database Jobs. They all include “Elastic” to note how they help you with flexibility in a scaled out/up data tier in Azure SQL Database. You CAN use them all together; it often makes sense to do so. But none of them is a requirement for the others. For example, your databases DO NOT need to be in Elastic Database Pools to take advantage of Elastic Database Query, the Elastic Scale Client, or the NEW Elastic Database Jobs.

If you would like to participate in the Private Preview, then feel free to skip down to the Requesting Private Preview Access section at the bottom. I will understand if you want to bypass the transcendent experience of my walkthrough… I guess…

Let’s dig in.

High Level Features of Elastic Database Jobs

1. Asynchronous execution of scripts

This is not meant for interactive querying. You can use Elastic Database Query (currently in public preview) for that. The scripts in your jobs will execute in the background against each target database.

2. Dynamic resolution of target databases

Elastic Database Jobs introduces a feature called a Target Group. When you create a job step, you specify the Target Group for which the job step should execute. Target groups can contain “include” or “exclude” references to Azure SQL Database Servers, Databases, and Elastic Database Pools. When using a Server or a Pool, the targets will be the Databases within those Servers or Pools. Since you can specifically create “exclude” entries in Target Groups, this lets you run your job against, for example, all databases on Server01 EXCEPT DatabaseA.

When the job runs, it starts by querying the entries in the Target Group in order to determine which databases should be targeted. It does this with every execution to make sure the list of targets is accurate at that moment. This is a fantastic feature. In the future, the team plans to allow you to target Resource Groups and Tags as well. I KNOW! RIGHT?! So cool.

Give or take certain special cases around data sovereignty (Germany and China, for example), you can execute jobs against databases in any Azure region using a single Elastic Jobs account.
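To make the “all databases on Server01 EXCEPT DatabaseA” example concrete, here is a minimal sketch. The group, server, and database names are made up for illustration, and the credential is the elasticmastercred created in the Security Setup section below. I am also assuming that the @membership_type parameter accepts 'Exclude', to match the membership_type column exposed by jobs.target_group_members. Check it against the procedures in your own Jobs DB before relying on it.

```sql
-- Sketch only: run in the Jobs DB. All names here are hypothetical.
EXEC jobs.sp_add_target_group 'Server01Group';

-- Include every database on the server.
-- The list of databases is resolved again at each execution.
EXEC jobs.sp_add_target_group_member
  'Server01Group'
, @target_type = 'SqlServer'
, @refresh_credential_name = 'elasticmastercred'
, @server_name = 'server01.database.windows.net';

-- Exclude a single database from that server.
-- ASSUMPTION: @membership_type accepts 'Exclude'.
EXEC jobs.sp_add_target_group_member
  'Server01Group'
, @membership_type = 'Exclude'
, @target_type = 'SqlDatabase'
, @server_name = 'server01.database.windows.net'
, @database_name = 'DatabaseA';
```

Because the group is resolved at run time, a database added to server01 later would be picked up on the next execution, while DatabaseA would stay excluded.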

3. Management using TSQL

Currently, during the private preview, you can only manage Jobs using TSQL. In the future, however, the team plans to add the ability to do so via REST APIs, PowerShell, and the Portal. REMINDER: The Elastic Database Jobs available in the Azure Portal at the time of this writing is the older version, not this new version.

4. Script execution guarantees

The Elastic Jobs engine guarantees “at least once” execution against all target databases. So, if a connection to a particular target database fails, the engine’s configurable retry logic keeps attempting to connect to that database to execute the job script.

The engine also guarantees that a job never has more than one execution running at a time. Suppose you have a job that typically executes in 30 seconds and you run that job every five minutes. If the execution of that job against DatabaseA is taking longer than expected and is still running when five minutes have passed and the next execution should begin, the engine will skip DatabaseA and note in the execution history that a previous execution is still running.
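As a rough sketch of how the retry settings and a five-minute schedule might be expressed: the job name and command below are hypothetical, and the retry and schedule parameter names are assumptions on my part that may not match what the private preview exposes. Look at the procedure definitions in your Jobs DB before using them.

```sql
-- Sketch only: run in the Jobs DB. Job name and command are hypothetical.
EXEC jobs.sp_add_job
  @job_name = 'UpdateStatsJob'
, @description = 'Refresh statistics on every target database';

-- ASSUMPTION: the retry parameters below exist under these names.
EXEC jobs.sp_add_jobstep
  @job_name = 'UpdateStatsJob'
, @command = N'EXEC sp_updatestats;'
, @credential_name = 'elasticjobcred'
, @target_group_name = 'BoogieServerGroup'
, @retry_attempts = 5
, @initial_retry_interval_seconds = 10
, @maximum_retry_interval_seconds = 120
, @retry_interval_backoff_multiplier = 2.0;

-- ASSUMPTION: the schedule is set on the job with these parameters.
EXEC jobs.sp_update_job
  @job_name = 'UpdateStatsJob'
, @enabled = 1
, @schedule_interval_type = 'Minutes'
, @schedule_interval_count = 5;
```

Because execution is “at least once,” a script can run more than once against the same database, so it pays to write commands that are safe to repeat.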

5. Reliable collection of query results to a single table (no duplicates)

You can execute a job that will query all the target databases and bring the results back to a single table for use in reporting, etc. Even if that job must retry against a particular database, it will ensure that no duplicates from multiple attempts are saved to the final destination table. Hereafter, I will refer to the database you use for this purpose as the Jobs Results DB. You can write to a database you use for other purposes as well. I just keep mine dedicated for organizational purposes.

This is a fantastic way to pull information from a scaled out data tier into a central repository for reporting and analytics scenarios.

A Quick Note About Scale

We have teams within Microsoft that rely on this technology to stay within strict reporting SLAs. Those teams have a large infrastructure they use with Azure to manage their business. The Elastic abilities within Azure SQL Database in general and Elastic Database Jobs in particular serve them well. We also have a customer using Elastic Database Jobs to manage a data tier of more than 130,000 Azure SQL Databases. This technology is HUGE for them.

Elastic Jobs Management Database

Once the private preview is activated for your Azure subscription (I will cover this at the end), you will execute some PowerShell to create an Azure SQL Database for the orchestration of Elastic Database Jobs. You can liken this to the role that the msdb system database plays in the on-prem SQL Server world.

This database must be an S2 (currently $75/month) or higher. So, if you want to play with this using Azure credits from an MSDN subscription, you will likely want to create this only when you will be working on it and destroy it when you are done. For the private preview, that is the only cost you really have. You pay for the database, and that’s it. I don’t have information to share on what the pricing will look like at general availability.

Note that you do not need to have the management database, which I will hereafter refer to as the Jobs DB, on a dedicated server. I just chose to do so in my own environment for organizational purposes.

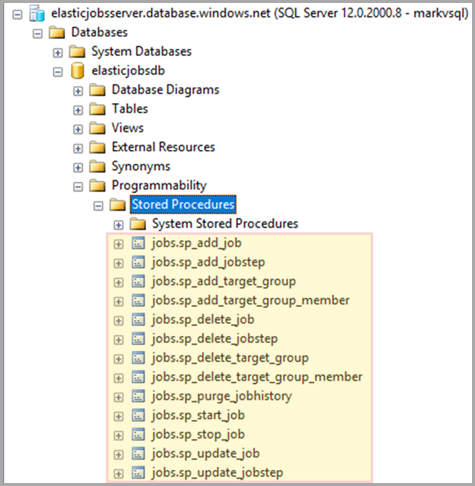

In the Jobs DB you will notice some familiar stored procedures, as shown in my Jobs DB in Figure 1.

Figure 1

In Figure 1, you can see sp_add_job, sp_add_job_step, etc., just like in msdb. You use these stored procedures for creating and managing your jobs, job steps, etc.

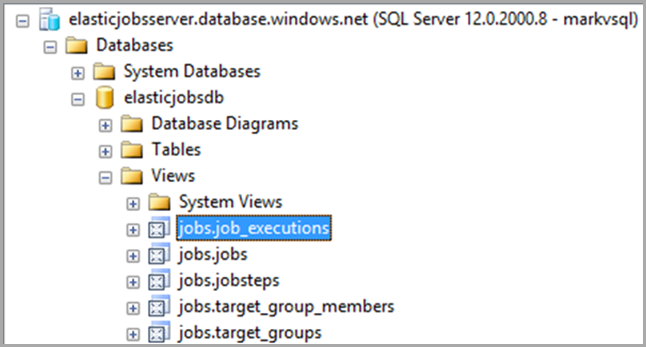

This database also contains a view you can use for querying the history of job executions, aptly named job_executions. You can see this along with some other useful views in Figure 2.

Figure 2
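If you would rather list these objects with a query than browse the tree, a minimal sketch follows. It assumes the procedures and views live in a schema named jobs, which is how they are referenced in the walkthroughs later in this post.

```sql
-- Run in the Jobs DB.
-- Lists the stored procedures (P) and views (V) in the jobs schema.
SELECT o.name, o.type_desc
FROM sys.objects AS o
WHERE o.schema_id = SCHEMA_ID('jobs')
  AND o.type IN ('P', 'V')
ORDER BY o.type_desc, o.name;
```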

Security Setup

For Elastic Jobs to work properly, there are some credentials, logins, and users that must be created.

First, in the context of the Jobs DB, you need to create a Database Master Key and two database scoped credentials.

-- Create a db master key using your own password.

CREATE MASTER KEY ENCRYPTION BY PASSWORD='NOT 12345';

GO

-- Create a database scoped credential for job execution.

CREATE DATABASE SCOPED CREDENTIAL elasticjobcred

WITH IDENTITY = 'jobcred', SECRET = 'NOT 12345';

GO

-- Create a database scoped credential for the master database of servers.

CREATE DATABASE SCOPED CREDENTIAL elasticmastercred

WITH IDENTITY = 'mastercred', SECRET = 'NOT 12345';

GO

/* EACH TARGET SERVER (including the server that houses the Jobs Results DB) */

/* master database */

CREATE LOGIN jobcred

WITH PASSWORD = 'same password used for jobcred secret';

GO

CREATE LOGIN mastercred

WITH PASSWORD = 'same password used for mastercred secret';

GO

CREATE USER mastercred

FOR LOGIN mastercred

WITH DEFAULT_SCHEMA = dbo;

GO

/* each target database (including Jobs Results DB) */

CREATE USER jobcred

FOR LOGIN jobcred

WITH DEFAULT_SCHEMA = dbo;

GO

/* Add user to the database appropriate role(s) */

EXEC sp_addrolemember N'db_owner', N'jobcred';

GO

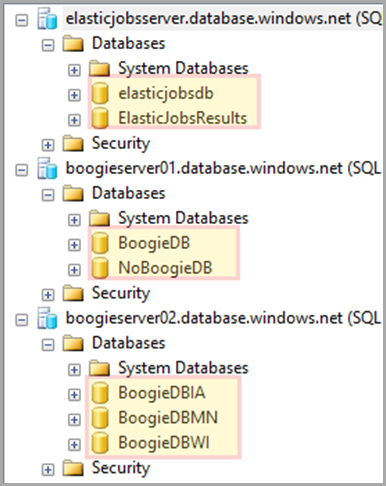

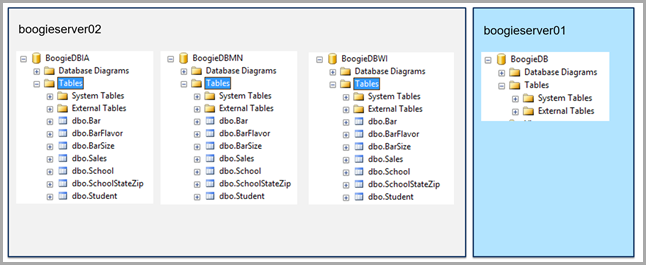
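Since the setup spans several databases, a quick check can help confirm each piece landed where it should. The queries below are a minimal sketch that uses only standard catalog views. Each one has to be run while connected to the database named in its comment.

```sql
-- In the Jobs DB: confirm both database scoped credentials exist.
SELECT name, credential_identity
FROM sys.database_scoped_credentials
WHERE name IN ('elasticjobcred', 'elasticmastercred');

-- In each target database (and the Jobs Results DB):
-- confirm the jobcred user exists and list its role memberships.
SELECT u.name AS user_name, r.name AS role_name
FROM sys.database_principals AS u
LEFT JOIN sys.database_role_members AS m
       ON m.member_principal_id = u.principal_id
LEFT JOIN sys.database_principals AS r
       ON r.principal_id = m.role_principal_id
WHERE u.name = 'jobcred';
```

If the second query returns no rows, the user was not created in that database. If role_name is NULL, the user exists but has not been added to any role.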

My Environment

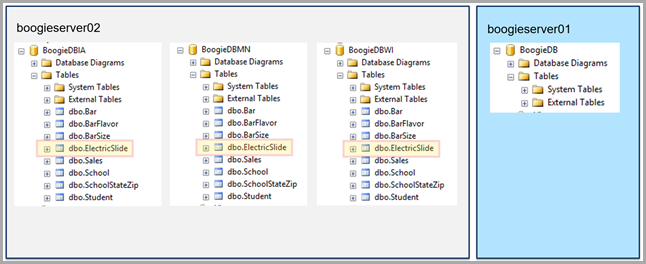

I think it makes sense to quickly show the environment I use for this demo, which is shown in Figure 3.

Figure 3

The Schema Update Walkthrough

-- Add a target group containing server(s)

EXEC jobs.sp_add_target_group 'BoogieServerGroup';

-- Add a server target member

EXEC jobs.sp_add_target_group_member

'BoogieServerGroup'

, @target_type = 'SqlServer'

, @refresh_credential_name='elasticmastercred'

, @server_name='boogieserver02.database.windows.net';

-- View members of target group

SELECT

target_group_name

, membership_type

, target_type

, server_name

FROM jobs.target_group_members

WHERE target_group_name='BoogieServerGroup';

-- Add job for create table

EXEC jobs.sp_add_job @job_name='CreateTableElectricSlide'

, @description='Create Table ElectricSlide';

-- Add job step for create table

EXEC jobs.sp_add_jobstep

@job_name='CreateTableElectricSlide'

, @command = N'IF NOT EXISTS (SELECT * FROM sys.tables

WHERE object_id = object_id(''ElectricSlide''))

CREATE TABLE [dbo].[ElectricSlide]([ElectricSlideId] [int] NOT NULL);'

, @credential_name= 'elasticjobcred'

, @target_group_name='BoogieServerGroup';

-- View the job step definition

SELECT

[job_name]

, [step_id]

, [command_type]

, [command]

, [credential_name]

, [target_group_name]

FROM [jobs].[jobsteps]

WHERE job_name = 'CreateTableElectricSlide';

-- Execute the latest version of a job

exec jobs.sp_start_job 'CreateTableElectricSlide';

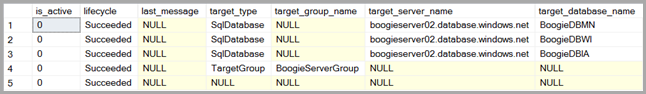

-- View latest execution

SELECT

is_active

, lifecycle

, last_message

, target_type

, target_group_name

, target_server_name

, target_database_name

FROM jobs.job_executions

WHERE job_name = 'CreateTableElectricSlide'

AND job_execution_id =

(

SELECT job_execution_id FROM jobs.job_executions

WHERE step_id IS NULL and create_time =

(

SELECT MAX(create_time)

FROM jobs.job_executions WHERE step_id IS NULL AND job_name = 'CreateTableElectricSlide'

)

)

ORDER BY start_time DESC;

GO
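When a target group resolves to many databases, a row per target can be a lot to scan. As a minimal sketch, the query below summarizes the latest execution of the job by lifecycle value instead. It uses only the columns already shown above and assumes, as the query above does, that the row with a NULL step_id identifies the execution as a whole.

```sql
-- Run in the Jobs DB.
-- Counts the rows of the job's latest execution per lifecycle value.
SELECT lifecycle, COUNT(*) AS row_count
FROM jobs.job_executions
WHERE job_name = 'CreateTableElectricSlide'
  AND job_execution_id =
      (
        SELECT TOP (1) job_execution_id
        FROM jobs.job_executions
        WHERE job_name = 'CreateTableElectricSlide'
          AND step_id IS NULL
        ORDER BY create_time DESC
      )
GROUP BY lifecycle
ORDER BY lifecycle;
```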

-- Add a database target member

EXEC jobs.sp_add_target_group_member

'BoogieServerGroup'

, @target_type = 'SqlDatabase'

, @server_name='boogieserver01.database.windows.net'

, @database_name='BoogieDB';

-- View the recently added database in target group

SELECT

target_group_name

, membership_type

, target_type

, server_name

, [database_name]

FROM jobs.target_group_members

WHERE target_group_name='BoogieServerGroup';

-- Execute the latest version of a job

exec jobs.sp_start_job 'CreateTableElectricSlide';

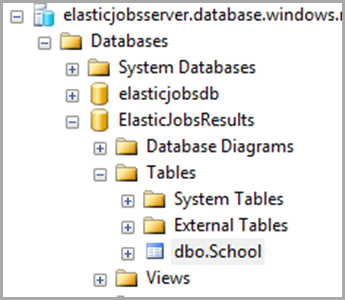
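To confirm the result on the target side, you can look for the table directly. This is a minimal sketch using standard catalog views. Run it while connected to one of the target databases, for example BoogieDB, rather than the Jobs DB.

```sql
-- Run in a target database, not in the Jobs DB.
SELECT s.name AS schema_name, t.name AS table_name, t.create_date
FROM sys.tables AS t
JOIN sys.schemas AS s
  ON s.schema_id = t.schema_id
WHERE t.name = 'ElectricSlide';
```

On the boogieserver02 databases, create_date should still reflect the first run, because the IF NOT EXISTS guard in the job step leaves an existing table alone on the second run.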

Query Results Walkthrough

-- Remove the database target member (BoogieDB) added in the previous walkthrough

DECLARE @mytarget_id uniqueidentifier

SET @mytarget_id =

(

SELECT target_id FROM jobs.target_group_members

WHERE [database_name] = 'BoogieDB'

);

EXEC jobs.sp_delete_target_group_member

@target_group_name = 'BoogieServerGroup'

, @target_id = @mytarget_id;

-- Add a job to collect results

EXEC jobs.sp_add_job

@job_name ='ResultsJob'

, @description='Collect data from all databases';

-- Add a job step to collect results

EXEC jobs.sp_add_jobstep

@job_name='ResultsJob',

@command= N'SELECT DB_NAME() AS DatabaseName, SchoolID, SchoolName FROM dbo.School;',

@credential_name='elasticjobcred',

@target_group_name='BoogieServerGroup',

@output_type='SqlDatabase',

@output_credential_name='elasticjobcred',

@output_server_name='elasticjobsserver.database.windows.net',

@output_database_name='ElasticJobsResults',

@output_schema_name='dbo',

@output_table_name='School';

-- Execute the latest version of a job

exec jobs.sp_start_job 'ResultsJob';

-- View latest execution

SELECT

is_active

, lifecycle

, last_message

, target_type

, target_group_name

, target_server_name

, target_database_name

FROM jobs.job_executions

WHERE job_name = 'ResultsJob'

AND job_execution_id =

(

SELECT job_execution_id FROM jobs.job_executions

WHERE step_id IS NULL and create_time =

(

SELECT MAX(create_time)

FROM jobs.job_executions WHERE step_id IS NULL AND job_name = 'ResultsJob'

)

)

ORDER BY start_time DESC;

GO

-- View the collected rows (run in the Jobs Results DB)

SELECT * FROM dbo.School;
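A quick way to sanity-check the collected data is to count rows per source database and look for repeats. The sketch below assumes that SchoolID is unique within each source database and that ResultsJob has been started only once. If you have started it several times, I would expect each execution to add its own rows, so repeats across executions would not point to a retry problem.

```sql
-- Run in the Jobs Results DB (ElasticJobsResults in this walkthrough).

-- Rows collected per source database.
SELECT DatabaseName, COUNT(*) AS row_count
FROM dbo.School
GROUP BY DatabaseName
ORDER BY DatabaseName;

-- Any (DatabaseName, SchoolID) pair that was stored more than once.
SELECT DatabaseName, SchoolID, COUNT(*) AS copies
FROM dbo.School
GROUP BY DatabaseName, SchoolID
HAVING COUNT(*) > 1;
```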
