Fans of Dr. Who will immediately recognize the title of this post. In that classic science fiction series, the Doctor has a very special vessel that lets him travel anywhere in Time and Space, even across dimensions. That vessel is called the T.A.R.D.I.S. (Time And Relative Dimension In Space). As I was crafting the idea for this post, paying homage to Dr. Who just seemed like the perfect hook.

At my current client, I have been working on an ETL solution. It involves automating the execution of about 70 unique stored procedures written by another team, each of which populates its own output table, and then consolidating all of that data into a dimensional model in a data warehouse. Many of these stored procedures do quite a bit of work and take several minutes to execute. Some run more quickly. Each of these procedures is independent of the others, so I am able to run any of them in parallel without affecting the results. This will, however, affect server resources, so running all 70 at once would be a great way to tick off the DBA. Running them serially, on the other hand, pretty much guarantees that I will exceed the 3-hour window in which my process must execute.

I decided to start by assigning each procedure to one of five processing silos, which I called Process Groups. I made these assignments in a table to allow for total control and tuning for best results. For example, if one of the procedures took far longer than the others, I could give that a Process Group all to itself and divide the others evenly across the remaining Process Groups. I actually created a procedure to automate that, but I will keep that for another post. For this post, I chose to just do three silos instead of five.

Note: I have included the SSIS solution as well as a script that creates all necessary database objects and rows right here. I will only show code that directly relates to the technique I am demonstrating. Also, I am using SSIS 2014 for this post. At my client, I used SSIS 2012. While I am using a task that was introduced in SSIS 2012, namely the Expression Task, you can accomplish the same thing using a Script Task in prior versions of SSIS. So, I see no reason this could not work on 2008 R2 (and possibly earlier).

The TARDIS Child Package

I created a table called StoredProcedure with the rows shown in Figure 1.

Figure 1
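For readers without the figure, here is a rough sketch of what such a control table might look like. The column names ProcedureName and ProcessGroup come from the GetStoredProcedureProcessGroup procedure used later in this post. The data types, the primary key, and the assignments for Process Groups 2 and 3 are assumptions for illustration, not the contents of the downloadable script. Only the Process Group 1 rows (dbo.InsTable1 and dbo.InsTable4) match the walkthrough.

```sql
-- Sketch only: data types and key are assumed, not taken from the downloadable script.
CREATE TABLE dbo.StoredProcedure
(
      ProcedureName nvarchar(256) NOT NULL PRIMARY KEY  -- two-part name, e.g. dbo.InsTable1
    , ProcessGroup  tinyint       NOT NULL
);

INSERT INTO dbo.StoredProcedure (ProcedureName, ProcessGroup)
VALUES
      (N'dbo.InsTable1', 1)  -- Process Group 1 rows match the walkthrough
    , (N'dbo.InsTable4', 1)
    , (N'dbo.InsTable2', 2)  -- assumed assignment
    , (N'dbo.InsTable5', 2)  -- assumed assignment
    , (N'dbo.InsTable3', 3)  -- assumed assignment
    , (N'dbo.InsTable6', 3); -- assumed assignment
```

With a layout like this, moving a procedure to a different silo is a single UPDATE against the table, with no change to either package.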

The purpose of this table is to control the Process Group to which each stored procedure belongs. I also created each of those stored procedures. They each insert the Process Group value passed into them into their respective tables.

CREATE TABLE dbo.Table1
(
      ProcessGroup tinyint
    , RecordCreateDate datetime2 DEFAULT CONVERT(datetime2, GETDATE())
);

I created Table1 thru Table6 with this exact same pattern.

My child package is pretty simple, as shown in Figure 2.

Figure 2

Figure 3 shows the variables in the package.

Figure 3

The first step, marked with the 1 in Figure 2, is an Execute SQL Task that calls the GetStoredProcedureProcessGroup stored procedure, which is shown below.

CREATE PROCEDURE dbo.GetStoredProcedureProcessGroup
    @ProcessGroup tinyint
AS
SELECT
    ProcedureName
FROM dbo.StoredProcedure
WHERE ProcessGroup = @ProcessGroup;
GO

The task is configured to pass the value of the User::ProcessGroup variable as the @ProcessGroup parameter for the stored procedure, as shown in Figures 4 and 5.

Figure 4

Figure 5

Just to show how brilliantly this procedure works, let’s execute it.

EXEC dbo.GetStoredProcedureProcessGroup @ProcessGroup = 1

It produces the following results shown in Figure 6.

Figure 6

Hold your applause, please. Feel free to check this against Figure 1 to make sure these are the procedures configured for Process Group 1. We’ll wait.

We good? Cool.

When these results get returned to the package, they are stored in the User::StoredProcedureList variable, which is of the System.Object data type.

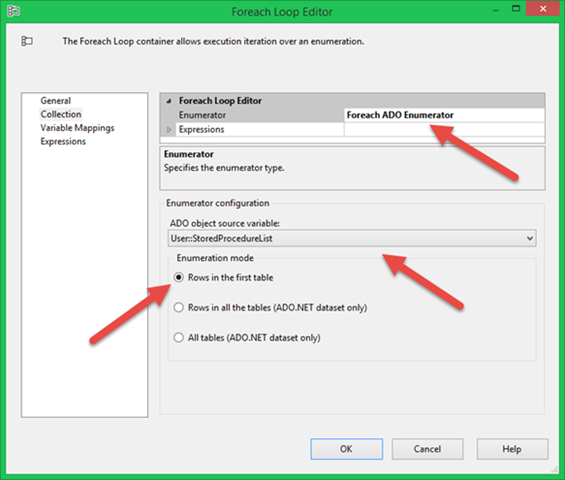

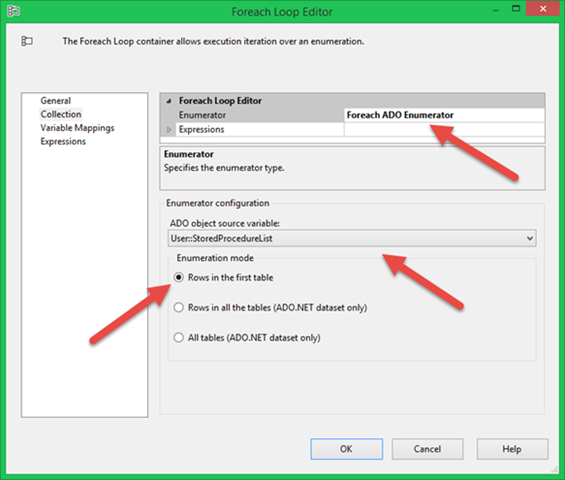

The next step in the package, marked with the 2 in Figure 2, is a For Each Loop that iterates through each row residing in the User::StoredProcedureList variable. That configuration is shown in Figures 7 and 8.

Figure 7

Figure 8

The first time through the loop, the User::StoredProcedure package variable will get the value ‘dbo.InsTable1’ which is the first row shown in Figure 6.

Package execution then moves on to the “SQL Execute Stored Procedure For Process Group” Execute SQL Task, shown by the 3 in Figure 2. This task calls the ExecuteSingleStoredProcedure stored procedure, which is shown below.

CREATE PROCEDURE dbo.ExecuteSingleStoredProcedure
      @StoredProcedure nvarchar(256)
    , @ProcessGroup tinyint
AS
DECLARE @SQLString nvarchar(1000);
-- nvarchar(3) holds any tinyint value (0-255); nchar(1) would fail for Process Groups above 9.
SET @SQLString = N'EXEC ' + @StoredProcedure
    + N' @ProcessGroup = ' + CONVERT(nvarchar(3), @ProcessGroup) + N';';
EXEC sp_executesql @SQLString;
GO
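Because this procedure concatenates a name into a SQL string, it is worth considering a more defensive variant if the control table can be edited by people you do not fully trust. The following is a minimal sketch, not the procedure used in the package: the name dbo.ExecuteSingleStoredProcedureChecked is hypothetical, and it assumes the names stored in dbo.StoredProcedure are exactly the strings the For Each Loop passes in (for example dbo.InsTable1).

```sql
-- Sketch only: dbo.ExecuteSingleStoredProcedureChecked is a hypothetical name.
CREATE PROCEDURE dbo.ExecuteSingleStoredProcedureChecked
      @StoredProcedure nvarchar(256)
    , @ProcessGroup    tinyint
AS
BEGIN
    SET NOCOUNT ON;

    -- Refuse anything that is not registered for this Process Group.
    IF NOT EXISTS (SELECT 1
                   FROM dbo.StoredProcedure
                   WHERE ProcedureName = @StoredProcedure
                     AND ProcessGroup  = @ProcessGroup)
    BEGIN
        RAISERROR(N'%s is not registered for Process Group %d.', 16, 1,
                  @StoredProcedure, @ProcessGroup);
        RETURN 1;
    END;

    -- EXEC accepts the procedure name in a variable, so no SQL string is built.
    EXEC @StoredProcedure @ProcessGroup = @ProcessGroup;
END;
GO
```

The trade-off is one extra lookup per call in exchange for never executing text that is not already listed in the control table.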

The configuration of the Execute SQL Task is shown in Figures 9 and 10.

Figure 9

Figure 10

The User::StoredProcedure variable was populated by the For Each Loop. The User::ProcessGroup variable will be passed from the Parent Package. For now, if we assume that value is 1, we end up with the following stored procedure call being passed to the database:

EXECUTE dbo.ExecuteSingleStoredProcedure
      @StoredProcedure = N'dbo.InsTable1'
    , @ProcessGroup = 1;

This will insert a single row into dbo.Table1.

Once that execution is complete, the For Each Loop starts over again for the next row in the User::StoredProcedureList variable, the record for dbo.InsTable4, and the following stored procedure call is passed to the database:

EXECUTE dbo.ExecuteSingleStoredProcedure
      @StoredProcedure = N'dbo.InsTable4'
    , @ProcessGroup = 1;

Once that execution is complete, the For Each Loop detects there are no more records in the User::StoredProcedureList variable, and it finishes its work, reporting Success.

To see the resulting effect on the tables, we can run the following query:

SELECT 'Table1' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table1
UNION ALL
SELECT 'Table2' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table2
UNION ALL
SELECT 'Table3' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table3
UNION ALL
SELECT 'Table4' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table4
UNION ALL
SELECT 'Table5' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table5
UNION ALL
SELECT 'Table6' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table6
ORDER BY RecordCreateDate;

This produces the results shown in Figure 11.

Figure 11

Since the stored procedures dbo.InsTable1 and dbo.InsTable4 were run serially, with dbo.InsTable1 going first, the RecordCreateDate for Table1 is earlier than that of Table4. Later, when we run all process groups, the RecordCreateDate will be the proof that the TARDIS solution works. The reason is that four of the six stored procedures, InsTable1 thru InsTable6, have some manner of delay in their processing. For example, InsTable1 has the following definition:

CREATE PROCEDURE dbo.InsTable1
    @ProcessGroup tinyint
AS
WAITFOR DELAY '00:00:20';
INSERT INTO dbo.Table1 (ProcessGroup) VALUES (@ProcessGroup);
GO

Here I use the WAITFOR to cause execution to simply pause. In the case of InsTable1, I am pausing for 20 seconds. I took this approach just for this post in order to prove this solution works. InsTable1 will actually be the first procedure to get called. I put in the delay to make sure it finishes AFTER some procedures that are called later. With serial processing, this would be impossible.

I mentioned that the TARDIS Child package would get the value of the User::ProcessGroup variable from the TARDIS Parent package. This is accomplished via a Parent Package Variable configuration, as shown in Figure 12.

Figure 12

When the TARDIS Parent package tells the TARDIS Child package to execute, the TARDIS Child will look at the User::ProcessGroup variable on TARDIS Parent and take that value for its own User::ProcessGroup variable. The fact that the User::ProcessGroup variable of TARDIS Parent can only have a single value (at a time) meant I needed a way for each execution of TARDIS Child to get a different value. My solution ended up being pretty timey wimey.

The TARDIS Parent Package

This is where it gets really fun. First, I will just show you the TARDIS Parent package in Figure 13. Note that the numbers in Figure 13 denote the relative order in which I will discuss the tasks, not necessarily the order of execution.

Figure 13

Step 1 merely involves truncating all of the output tables, Table1 thru Table6. Nothing to see here.

In Step 2, I use the Expression Task to set the value of the User::ProcessGroup variable to 1. The configuration of that task is shown in Figure 14.

Figure 14

There isn’t really anything fancy about this. That said, I like the Expression Task. 🙂

Step 3 is the first Execute Package task calling TARDIS Child. Thanks to the Parent Package Variable configuration, TARDIS Child will get the value 1 for its User::ProcessGroup variable. The only setting I made here was in setting the connection manager to the TARDIS Child.dtsx file. That connection manager is shown in Figure 15.

Figure 15

The Execute Package Tasks in Steps 3, 6, and 9 all use the same connection manager. Therefore, they all execute the same child package.

Step 4 is where the coolness comes in. When I originally started trying to solve this problem, I was attempting to come up with a way to have a single variable pass three different values to the child package. Then it hit me. There is NO reason I have to call the child package multiple times at EXACTLY the same moment. Like the TARDIS, it can exist in multiple dimensions, or time signatures, simultaneously. Therefore, all I really had to do was wait a bit, then change the value of the User::ProcessGroup variable before calling the next incarnation of the child package. Boom. This is accomplished using a Script Task. I got the code I needed for it from Mike Davis (Blog | Twitter) in this post on BIDN. The code for it is very simple, as shown in Figure 16.

Figure 16

Step A in the script adds System.Threading to the script's namespaces. This enables the use of the System.Threading.Thread.Sleep() method, which pauses execution for the number of milliseconds provided. In this case, I am pausing for 5000 milliseconds (5 seconds).
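If you would rather not write a Script Task, a similar pause could probably be had from an Execute SQL Task, using the same WAITFOR statement the demo procedures rely on. This is an assumed alternative, not what the package in this post does, and it holds a database connection open for the duration of the wait.

```sql
-- Assumed alternative to the Script Task: pause 5 seconds inside an Execute SQL Task.
WAITFOR DELAY '00:00:05';
```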

Once the wait is over, Step 5 of the TARDIS Parent package can change the value of the User::ProcessGroup variable to 2.

Step 6 then calls the TARDIS Child package a second time. But this time, TARDIS Child gets a value of 2 for its User::ProcessGroup variable. As of that moment, TARDIS Child now essentially exists twice in Time and Space, just slightly offset.

Steps 7 thru 9 perform this same process, waiting 10 seconds instead of 5.

The wait is the key. What is also vital is that I do NOT have any precedence constraints between the separate calls of TARDIS Child. Thus, the second execution of TARDIS Child does not wait for the first. Nor does the third execution wait for the first or second. When the Sequence Container starts executing, the steps labeled 2, 4, and 7 all start at exactly the same time. This is shown in Figure 17, which took me several tries to capture properly.

Figure 17

The Tasks shown by the arrows all start at exactly the same time. Those waits in SCR Wait 5 Seconds and SCR Wait 10 Seconds cause my three executions of TARDIS Child to happen 5 seconds apart. Figure 18 was taken later in the execution.

Figure 18

Here you can see that, although the executions for Process Group 1 and Process Group 2 started before that of Process Group 3, the parallel nature of this solution meant that Process Group 3 could still finish first as it is independent of the other Process Groups. We can confirm this by querying the tables with the query we used earlier, ordering the results by RecordCreateDate.

SELECT 'Table1' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table1
UNION ALL
SELECT 'Table2' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table2
UNION ALL
SELECT 'Table3' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table3
UNION ALL
SELECT 'Table4' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table4
UNION ALL
SELECT 'Table5' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table5
UNION ALL
SELECT 'Table6' AS TableName, ProcessGroup, RecordCreateDate FROM dbo.Table6
ORDER BY RecordCreateDate;

This returns the results shown in Figure 19.

Figure 19

Note the ProcessGroup column. This proves that, although the TARDIS Child package for Process Group 3 was executed last, it was able to finish first.
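With more than a handful of tables, it can be easier to compare one row per Process Group instead of one row per table. Here is a possible summary query over the same six tables. The AllRows CTE name and the output column aliases are made up for this sketch.

```sql
-- Sketch only: one row per Process Group, ordered by when each group finished.
WITH AllRows AS
(
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table1
    UNION ALL
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table2
    UNION ALL
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table3
    UNION ALL
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table4
    UNION ALL
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table5
    UNION ALL
    SELECT ProcessGroup, RecordCreateDate FROM dbo.Table6
)
SELECT
      ProcessGroup
    , COUNT(*)              AS RowsInserted
    , MIN(RecordCreateDate) AS FirstInsert
    , MAX(RecordCreateDate) AS LastInsert   -- when the group's last procedure finished
FROM AllRows
GROUP BY ProcessGroup
ORDER BY LastInsert;
```

If the groups really ran in parallel, the LastInsert order does not have to follow the order in which the groups were started.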

There you have it. I hope this can give you some ideas if you are ever in a similar situation. Bow ties are cool.
